This tutorial implements a polyphonic sine wave synthesiser that responds to MIDI input. This makes use of the Synthesiser class and related classes.

Level: Intermediate

Platforms: Windows, macOS, Linux

Classes: Synthesiser, SynthesiserVoice, SynthesiserSound, AudioSource, MidiMessageCollector

Download the demo project for this tutorial here: PIP | ZIP. Unzip the project and open the first header file in the Projucer.

If you need help with this step, see Tutorial: Projucer Part 1: Getting started with the Projucer.

The demo project presents an on-screen keyboard that can be used to play a simple sine wave synthesiser.

The on-screen keyboard can be controlled from the computer keyboard (keys A, S, D, F and so on play the musical notes C, D, E, F and so on). This allows you to play the synthesiser polyphonically.

This tutorial makes use of the JUCE Synthesiser class to implement a polyphonic synthesiser. It shows you all the basic elements needed to customise the synthesiser with your own sounds in your own applications. Several classes are needed to get this to work. In addition to our standard MainContentComponent class, these are:

- SynthAudioSource: This implements a custom AudioSource class called SynthAudioSource, which contains the Synthesiser class itself. This outputs all of the audio from the synthesiser.
- SineWaveVoice: This is a custom SynthesiserVoice class called SineWaveVoice. A voice class renders one of the voices of the synthesiser mixing it with the other sounding voices in a Synthesiser object. A single instance of a voice class renders one voice.
- SineWaveSound: This contains a custom SynthesiserSound class called SineWaveSound. A sound class is effectively a description of the sound that can be created as a voice. For example, this may contain the sample data for a sampler voice or the wavetable data for a wavetable synthesiser.

Our MainContentComponent class contains the following data members.

The synthAudioSource and keyboardComponent members are initialised in the MainContentComponent constructor.

See Tutorial: Handling MIDI events for more information on the MidiKeyboardComponent class.

So that we can play the on-screen keyboard from the computer's keyboard, we grab the keyboard focus just after the application starts. To do this we use a simple timer that fires after 400 ms:

The application uses the AudioAppComponent to set up a simple audio application (see Tutorial: Build a white noise generator for the most basic application). The three required pure virtual functions simply call the corresponding functions in our custom AudioSource class:

The SynthAudioSource class does a little more work:

In the getNextAudioBlock() function we pull buffers of MIDI data from the MidiKeyboardState object.

SynthesiserSound objects can be shared between Synthesiser objects if you wish. The SynthesiserSound class is a type of ReferenceCountedObject class, so the lifetime of SynthesiserSound objects is handled automatically.

Store each sound object in a YourSoundClass::Ptr variable for this memory management to work.

Our sound class is very simple; it doesn't even need to contain any data. It just needs to report whether this sound should play on particular MIDI channels and on specific notes or note ranges on that channel. In our simple case, it just returns true from both the appliesToNote() and appliesToChannel() functions. As mentioned above, the sound class might be where you would store data that is needed to create the sound (such as a wavetable).

The SineWaveVoice class is a bit more complex. It needs to maintain the state of one of the voices of the synthesiser. For our sine wave, we need these data members:

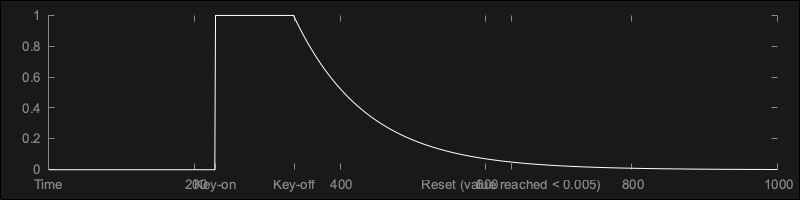

See Tutorial: Build a sine wave synthesiser for information on the first three. The tailOff member is used to give each voice a slightly softer release to its amplitude envelope. This gives each voice a slight fade out at the end rather than stopping abruptly.

The SynthesiserVoice::canPlaySound() function must be overridden to return whether the voice can play a sound. We could just return true in this case but our example illustrates how to use dynamic_cast to check the type of the sound class being passed in.
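The dynamic_cast check can be illustrated without JUCE. The following is a minimal sketch in standard C++ only: `Sound`, `SineSound`, `SamplerSound` and `SineVoice` are hypothetical stand-ins for the JUCE base classes and the tutorial's own classes, not the real API.

```cpp
#include <cassert>

// Hypothetical stand-in for a polymorphic sound base class (not the JUCE class).
struct Sound
{
    virtual ~Sound() = default;
};

struct SineSound    : Sound {};   // the sound type our voice knows how to play
struct SamplerSound : Sound {};   // some unrelated sound type

// Hypothetical stand-in for a voice class.
struct SineVoice
{
    // dynamic_cast yields nullptr when the object is not a SineSound
    // (or when the pointer itself is null), so the comparison is the whole test.
    bool canPlaySound (const Sound* sound) const
    {
        return dynamic_cast<const SineSound*> (sound) != nullptr;
    }
};

// Small usage check: a sine voice accepts sine sounds and rejects others.
inline void checkVoiceMatching()
{
    SineSound sine;
    SamplerSound sampler;
    SineVoice voice;

    assert (voice.canPlaySound (&sine));
    assert (! voice.canPlaySound (&sampler));
}
```

Checking the type this way lets one synthesiser hold several kinds of sound and voice, with each voice only being assigned sounds it understands.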

A voice is started by the owning synthesiser by calling our SynthesiserVoice::startNote() function, which we must override:

Again, most of this should be familiar to you from Tutorial: Build a sine wave synthesiser. The tailOff value is set to zero at the start of each voice. We also use the velocity of the MIDI note-on event to control the level of the voice.
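The arithmetic behind starting a voice can be sketched in standard C++, independent of JUCE. In this sketch the struct `SineVoiceState`, the member names other than `tailOff`, the helper `midiNoteToHz()` and the 0.15 level scaling are all assumptions made for illustration. The note-to-frequency formula is the usual equal-temperament one with A4 (MIDI note 69) at 440 Hz.

```cpp
#include <cassert>
#include <cmath>

// Equal-temperament conversion with MIDI note 69 (A4) tuned to 440 Hz.
inline double midiNoteToHz (int midiNoteNumber)
{
    return 440.0 * std::pow (2.0, (midiNoteNumber - 69) / 12.0);
}

// Hypothetical plain-C++ voice state (not a JUCE class).
struct SineVoiceState
{
    double currentAngle = 0.0;   // phase of the oscillator in radians
    double angleDelta   = 0.0;   // phase increment per sample
    double level        = 0.0;   // amplitude derived from note-on velocity
    double tailOff      = 0.0;   // 0 while the note is held

    void startNote (int midiNoteNumber, float velocity, double sampleRate)
    {
        assert (sampleRate > 0.0);
        constexpr double twoPi = 6.283185307179586;

        currentAngle = 0.0;
        level        = velocity * 0.15;   // assumed scaling, leaves headroom for several voices
        tailOff      = 0.0;               // reset the release envelope for the new note

        const double cyclesPerSample = midiNoteToHz (midiNoteNumber) / sampleRate;
        angleDelta = cyclesPerSample * twoPi;
    }
};
```

For example, calling `startNote (69, 1.0f, 44100.0)` sets `angleDelta` to 2π × 440 / 44100 radians per sample.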

The SynthesiserVoice::renderNextBlock() function must be overridden to generate the audio.

- We mix the currentSample value with the value already at index startSample. This is because the synthesiser will be iterating over all of the voices. It is the responsibility of each voice to mix its output with the current contents of the buffer.
- Once the voice has been asked to stop, the tailOff value will be greater than zero. You can see the synthesis algorithm is similar.
- When the tailOff value is small we determine that the voice has ended. We must call the SynthesiserVoice::clearCurrentNote() function at this point so that the voice is reset and available to be reused.
- Take note of the startSample argument. The synthesiser is very likely to call the renderNextBlock() function mid-way through one of its output blocks. This is because the notes may start on any sample. These start times are based on the timestamps of the MIDI data received.

A voice is stopped by the owning synthesiser calling our SynthesiserVoice::stopNote() function, which we must override:

This may include velocity information from the MIDI note-off message, but in many cases we can ignore this. We may be asked to stop the voice immediately, in which case we call the SynthesiserVoice::clearCurrentNote() function straight away. Under normal circumstances the synthesiser will allow our voices to end naturally. In our case we have the simple tail-off envelope. We trigger our tail-off by setting our tailOff member to 1.0.
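The rendering and stopping behaviour described above can be sketched in standard C++ without JUCE. This is an illustration under stated assumptions, not the tutorial's listing: `SineVoiceSketch`, its `active` flag, the `std::vector<float>` buffer, the 0.99 per-sample decay and the 0.005 cut-off are all hypothetical choices, and `clearCurrentNote()` here is a local stand-in for the JUCE function of the same name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical plain-C++ voice (not a JUCE class).
struct SineVoiceSketch
{
    double currentAngle = 0.0, angleDelta = 0.0, level = 0.0, tailOff = 0.0;
    bool active = false;

    void startNote (double frequencyHz, double sampleRate, double noteLevel)
    {
        assert (sampleRate > 0.0);
        currentAngle = 0.0;
        angleDelta   = 2.0 * 3.141592653589793 * frequencyHz / sampleRate;
        level        = noteLevel;
        tailOff      = 0.0;
        active       = true;
    }

    void stopNote (bool allowTailOff)
    {
        if (allowTailOff)
        {
            if (tailOff == 0.0)   // begin the fade only once
                tailOff = 1.0;
        }
        else
        {
            clearCurrentNote();   // asked to stop immediately
        }
    }

    // Local stand-in: marks the voice as free to be reused.
    void clearCurrentNote()
    {
        active     = false;
        angleDelta = 0.0;
        tailOff    = 0.0;
    }

    // Mixes numSamples of output into the buffer, beginning at startSample.
    void renderNextBlock (std::vector<float>& buffer, int startSample, int numSamples)
    {
        assert (startSample >= 0 && numSamples >= 0);
        assert (static_cast<std::size_t> (startSample + numSamples) <= buffer.size());

        for (int i = startSample; i < startSample + numSamples && active; ++i)
        {
            const double gain = tailOff > 0.0 ? level * tailOff : level;

            // += mixes with whatever other voices have already written here.
            buffer[static_cast<std::size_t> (i)]
                += static_cast<float> (std::sin (currentAngle) * gain);
            currentAngle += angleDelta;

            if (tailOff > 0.0)
            {
                tailOff *= 0.99;        // assumed exponential decay per sample
                if (tailOff <= 0.005)   // assumed "quiet enough" threshold
                    clearCurrentNote();
            }
        }
    }
};
```

Because the voice adds to the buffer rather than overwriting it, samples outside the range from `startSample` to `startSample + numSamples` are left untouched, and several voices can render into the same block in turn.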

Let's add functionality to allow an external MIDI source to control our synthesiser in addition to the on-screen keyboard.

First add a MidiMessageCollector object as a member of the SynthAudioSource class. This provides a place to which MIDI messages can be sent and from which the SynthAudioSource class can retrieve them:

In order to process the timestamps of the MIDI data the MidiMessageCollector class needs to know the audio sample rate. Set this in the SynthAudioSource::prepareToPlay() function [10]:

Then you can pull any MIDI messages for each block of audio using the MidiMessageCollector::removeNextBlockOfMessages() function [11]:

We'll need access to this MidiMessageCollector object from outside the SynthAudioSource class, so add an accessor to the SynthAudioSource class like this:

In our MainContentComponent class we'll add this MidiMessageCollector object as a MidiInputCallback object to our application's AudioDeviceManager object.

To present a list of MIDI input devices to the user, we'll use some code from Tutorial: Handling MIDI events. Add some members to our MainContentComponent class:

Then add the following code to the MainContentComponent constructor.

Add the setMidiInput() function that is called in the code above:

Notice that we add the MidiMessageCollector object from our SynthAudioSource object as a MidiInputCallback object [13] for the specified MIDI input device. We also need to remove the previous MidiInputCallback object for the previously selected MIDI input device if the user changes the selected device using the combo-box [12].

We need to position this ComboBox object and adjust the position of the MidiKeyboardComponent object in our resized() function:

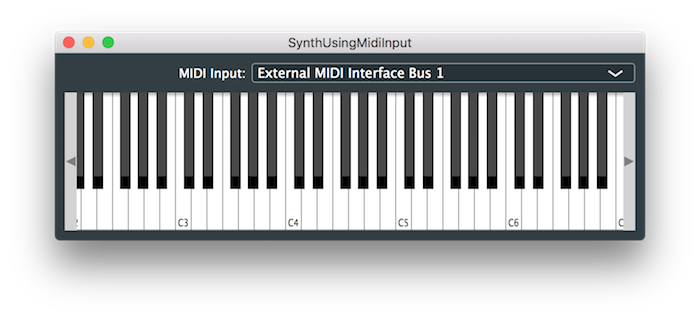

Run the application again and it should look something like this:

Of course, the devices listed will depend on your specific system configuration.

The code for this modified version can be found in the SynthUsingMidiInputTutorial_02.h file of the demo project.

This tutorial has introduced the Synthesiser class. After reading this tutorial you should be able to: