Implement a realistic string model by means of physical modelling. Incorporate a delay line to create intricate echo patterns in the stereo sound field.

Level: Advanced

Platforms: Windows, macOS, Linux

Plugin Format: VST, AU, Standalone

Classes: dsp::ProcessorChain, dsp::Gain, dsp::Oscillator, dsp::Convolution, dsp::WaveShaper, dsp::Reverb

This tutorial leads on from Tutorial: Add distortion through waveshaping and convolution. If you haven't done so already, you should read that tutorial first.

Download the demo project for this tutorial here: PIP | ZIP. Unzip the project and open the first header file in the Projucer.

Resources folder into the generated Projucer project. If you need help with this step, see Tutorial: Projucer Part 1: Getting started with the Projucer.

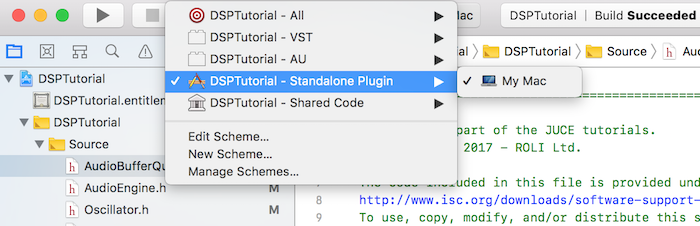

The project is conceived as a plugin but you can run it as a standalone application by selecting the proper deployment target in your IDE. In Xcode, you can change the target in the top left corner of the main window as shown in the following screenshot:

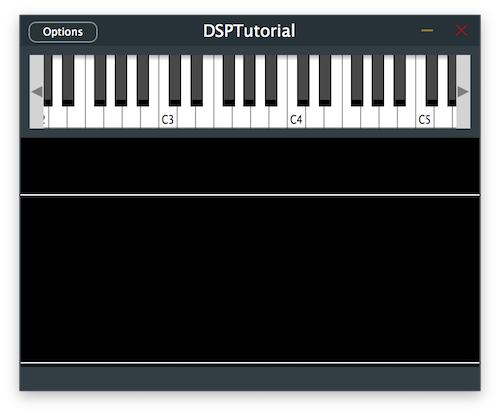

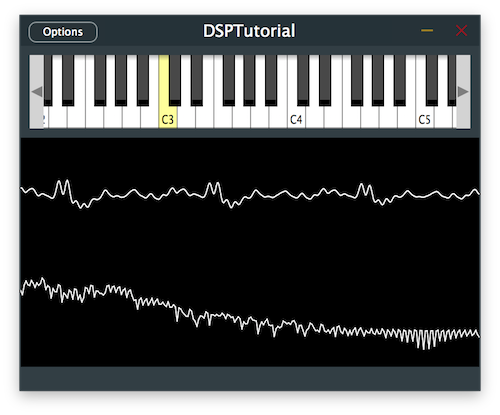

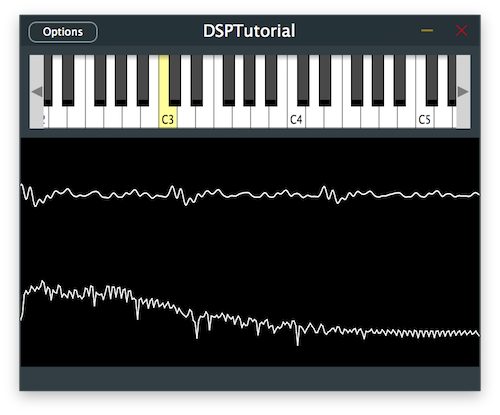

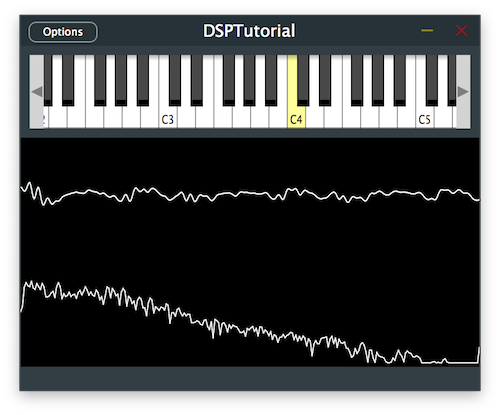

The demo project provides us with an on-screen MIDI keyboard in the top half of the plugin and a visual representation of the signal through an oscilloscope in the bottom half. Currently, when a key is pressed, the plugin outputs a basic oscillator sound with some reverb and distortion added.

AudioEngine class.

In this tutorial we are introducing two new DSP concepts that allow for signal processing in different ways: Delay Lines and Physical Modelling.

Let's start by defining this DSP terminology.

A delay line is a fundamental tool in DSP that can be used in a wide array of applications including simulations of reverberation spaces, sound synthesis, filter implementation and classic time-based effects such as delays, choruses, phasers and flangers.

Fundamentally, a delay line is very simple: it allows us to delay a signal by a given number of samples. By using multiple delay lines and summing the separate signals back together at different intervals, we can build a large share of the effects found in digital signal processing.

In the analog domain, delay lines were implemented by introducing an actual physical extension such as a spring to delay the propagation of waves. In the digital domain, delay lines are often implemented using a data structure referred to as a circular buffer.

A circular buffer is essentially an array whose index wraps around at the end, creating a circular data structure that matches the size of the sample buffer block. This lets us keep all the samples of the previous block accessible while processing the current block; they are then overwritten by the samples of the current block, ready for the next iteration.

In this tutorial, we will look into circular buffers as a way to implement delay lines.

Physical modelling denotes a sound synthesis method that relies on mathematical and physical models to generate sounds. Unlike other synthesis techniques, it does not make use of any samples as a starting point and focuses on how the sound is produced in the physical sense through the study of materials.

One of these models is called a digital waveguide, which is based on the physical model in which acoustic waves propagate through tubes or pipes. The reflection of these waves against boundaries can be efficiently computed using delay lines, and as such the sounds of many instruments, including strings, can be generated using this model.

In a nutshell, the waveguide string model builds upon the notion that a vibrating string can be modelled using two waves that travel in opposite directions and bounce back at the two end points. The combination of these two waves summed together eventually simulates the ideal motion of a plucked string and these can be implemented using two delay lines: a forward and a backward delay line.

However, this ideal model of a string never comes to rest in its current state as damping is not taken into account. Thus, when the waves change direction at the boundaries and their polarities are inverted, we can incorporate a damping factor to reduce the displacement of the waves.

Other variables to consider in this model are where the plucking of the string should occur and where the sound of the vibrating string should be picked up from. We therefore have to integrate a plucking position from which the two waves will propagate and a pickup location just as you would listen to the sound from a specific location in space.

Last but not least, a natural phenomenon that occurs in physical strings is the faster decay of higher frequencies than lower frequencies. This can be easily integrated into our model by simply adding a low-pass filter at one end of the boundaries to simulate this discrepancy in decay times.

In this tutorial, we will implement this digital waveguide model by using delay lines to simulate plucked strings with waves that reflect at the boundaries of attached strings.

Let's start by implementing a simple delay line as a circular buffer using a vector.

In the DelayLine class, there are a couple of self-explanatory helper functions already defined to facilitate the implementation such as size() and resize() methods, a clear() function and a back() function that retrieves the least recent sample in the buffer.

We first implement the push() function which adds a new sample by overwriting the least recently added sample [1] and updates the least recent index variable by wrapping the index by the size of the circular buffer [2]:

Then we complete the get() function by returning the sample located at an offset specified by the function argument while making sure that the index wraps around the vector [3]. Notice here that we make sure the delay does not exceed the size of the buffer.

Next, we fill in the set() function by assigning the sample at an offset specified by the function argument while making sure that the index wraps around the vector [4]. Here again, we make sure the delay does not exceed the size of the buffer.
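The three functions described above can be sketched as follows. This is a minimal, self-contained illustration rather than the demo project's exact listing: the class name `SimpleDelayLine` and the member names `rawData` and `leastRecentIndex` are assumptions, and plain `assert` stands in for whatever bounds check the project uses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal circular-buffer delay line (illustrative sketch, names are assumptions).
template <typename Type>
class SimpleDelayLine
{
public:
    explicit SimpleDelayLine (std::size_t numSamples) : rawData (numSamples, Type (0))
    {
        assert (numSamples > 0);
    }

    std::size_t size() const noexcept { return rawData.size(); }

    // Returns the least recently added sample, i.e. the next one to be overwritten.
    Type back() const noexcept { return rawData[leastRecentIndex]; }

    // Overwrites the least recent sample, then steps the index backwards,
    // wrapping to the end of the vector when it would go below zero.
    void push (Type valueToAdd) noexcept
    {
        rawData[leastRecentIndex] = valueToAdd;
        leastRecentIndex = (leastRecentIndex == 0) ? size() - 1 : leastRecentIndex - 1;
    }

    // Reads the sample added delayInSamples pushes ago (0 is the most recent).
    Type get (std::size_t delayInSamples) const noexcept
    {
        assert (delayInSamples < size());
        return rawData[(leastRecentIndex + 1 + delayInSamples) % size()];
    }

    // Writes to the same slot that get() would read for this delay.
    void set (std::size_t delayInSamples, Type newValue) noexcept
    {
        assert (delayInSamples < size());
        rawData[(leastRecentIndex + 1 + delayInSamples) % size()] = newValue;
    }

private:
    std::vector<Type> rawData;
    std::size_t leastRecentIndex = 0;
};
```

Because the write index moves backwards, the most recent sample always sits one slot after `leastRecentIndex`, which is why both `get()` and `set()` add one before applying the offset and the modulo.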

And that completes our implementation of a simple delay line.

With our basic delay line class implemented, let's incorporate a stereo delay effect into our signal chain.

In the Delay class, there are multiple parameters that can be tweaked to change the behaviour of our delay effect and these include delay times for individual channels, the maximum delay time allowed, the dry/wet level of the effect and the amount of feedback.

Using these parameters and the delay line implementation, we can create a wide range of delay effects as desired but let's start with the default parameters defined in the constructor:

These helper functions mainly set the corresponding member variables to store the parameters but some of them also require a resizing of data structures to accommodate the parameter changes.

One such case is the setMaxDelayTime() function defined below which calls the updateDelayLineSize() helper function [1]:

Complete the following function which ensures that the circular buffers of all the delay lines are large enough to accommodate any delay time up to the max delay time by resizing the vectors [2]:

Another noteworthy case is in the setDelayTime() function of individual channels where a parameter change causes a call to the updateDelayTime() helper function [3] as follows:

Implement this helper function that recalculates the delay times in samples for all the channels based on the new parameter change [4]:
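Both helpers come down to converting a time in seconds into a number of samples at the current sample rate. The sketch below shows that arithmetic in isolation; the free functions `delayLineSizeFor()` and `delayTimeInSamples()` are hypothetical names, and the extra slot and the rounding mode are assumptions rather than the demo project's choices.

```cpp
#include <cmath>
#include <cstddef>

// Number of slots needed so that any delay up to maxDelayTimeSeconds is a valid
// read offset. One extra slot is added so an offset equal to the maximum delay
// still falls inside the buffer (assumption of this sketch).
inline std::size_t delayLineSizeFor (double maxDelayTimeSeconds, double sampleRate)
{
    return static_cast<std::size_t> (std::ceil (maxDelayTimeSeconds * sampleRate)) + 1;
}

// Delay time of one channel expressed in whole samples (nearest sample).
inline std::size_t delayTimeInSamples (double delayTimeSeconds, double sampleRate)
{
    return static_cast<std::size_t> (std::round (delayTimeSeconds * sampleRate));
}
```

Both values depend on the sample rate, which is why they need to be recomputed whenever the sample rate changes and not only when a parameter is edited.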

In the reset() function, we reset the filters for each channel [5] which we will use in the next section of the tutorial and clear any old sample remaining in the delay lines [6]:

In the prepare() function, we make sure that the size of the delay lines [7] and the delay times in samples [8] are still correct in case the sample rate was changed between sample blocks and initialise the filters with a low-pass filter for now [9]:

Now let's deal with the process() function to actually implement the delay effect:
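As a rough guide to the per-sample logic, here is a single-channel feedback delay written without any JUCE types. It is a sketch under stated assumptions: the class name `FeedbackDelaySketch`, the parameter names and the fixed delay length are all hypothetical, and the real process() function works on multi-channel audio blocks.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Single-channel feedback delay (illustrative sketch, names are assumptions).
// delayInSamples must be greater than zero.
class FeedbackDelaySketch
{
public:
    FeedbackDelaySketch (std::size_t delayInSamples, float feedbackAmount, float wet)
        : buffer (delayInSamples, 0.0f), feedback (feedbackAmount), wetLevel (wet)
    {
    }

    float processSample (float inputSample) noexcept
    {
        // The slot about to be overwritten was written buffer.size() calls ago,
        // so it holds the delayed signal.
        const float delayedSample = buffer[writeIndex];

        // Feed the input plus a scaled copy of the delayed signal back into the
        // line. std::tanh keeps the stored value strictly between -1 and 1.
        buffer[writeIndex] = std::tanh (inputSample + feedback * delayedSample);
        writeIndex = (writeIndex + 1) % buffer.size();

        // Dry signal plus the delayed (wet) signal.
        return inputSample + wetLevel * delayedSample;
    }

private:
    std::vector<float> buffer;
    float feedback;
    float wetLevel;
    std::size_t writeIndex = 0;
};
```

Feeding a single impulse into this sketch produces an echo every `delayInSamples` samples, each one smaller than the last because it has passed through the feedback gain and the soft limiter once more.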

std::tanh(). The hyperbolic tangent function allows us to smoothly combine the two signals without clipping the summed sample and provides a natural decay.

If we run this code after implementing the above changes in the Delay class, we should be able to hear the delay effect on the oscillator signal.

Most delay effects incorporate a filtering of the signal as it repeats and decays in order to provide a more realistic sound just as it occurs in nature. So let's apply some filtering to the delayed sound.

This can be achieved very easily by simply changing one line in the process() function of the Delay class as follows:

Here we simply call the processSample() function on the filter object by passing the delayed sample from the delay line [1].

Change the filter type to a high-pass filter in the prepare() function by swapping the coefficients and calling the makeFirstOrderHighPass() function [2] as shown here:
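To see why a high-pass filter in the feedback path makes each repeat brighter, it can help to look at a first-order high-pass in plain C++. The sketch below is not the JUCE filter that makeFirstOrderHighPass() configures: it is a simple one-pole design chosen for readability, and the class name `OnePoleHighPassSketch` is hypothetical.

```cpp
#include <cmath>

// First-order high-pass built as "input minus one-pole low-pass"
// (illustrative sketch, not the JUCE IIR implementation).
class OnePoleHighPassSketch
{
public:
    OnePoleHighPassSketch (double sampleRate, double cutoffHz)
        : coefficient (static_cast<float> (std::exp (-2.0 * 3.14159265358979323846
                                                     * cutoffHz / sampleRate)))
    {
    }

    float processSample (float input) noexcept
    {
        // One-pole low-pass that tracks the slow-moving part of the signal.
        lowPassState = (1.0f - coefficient) * input + coefficient * lowPassState;

        // Removing the slow-moving part leaves the high frequencies.
        return input - lowPassState;
    }

private:
    float coefficient;
    float lowPassState = 0.0f;
};
```

Since the delayed sample passes through the filter every time it travels around the feedback loop, low frequencies are attenuated again on each repeat, so later echoes contain proportionally more high-frequency content.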

Running the program should give us a brighter delayed sound as the number of repeats increases.

Since we have conveniently implemented a delay line for our delay effect, we can use the same data structure to integrate a waveguide string model using the same class.

In the WaveguideString class, there are multiple parameters that can be tweaked to change the behaviour of our string model and these include the trigger position, the pickup position and the decay time for the damping of the strings.

Default parameters are defined in the constructor which in turn set the corresponding member variables as follows:

These helper functions also call the updateParameters() function which initialises various variables such as the size of the delay lines, the pickup indices relative to the delay lines, the trigger index relative to the forward delay line and the filter coefficients as well as the decay coefficient based on the decay time.

Add the implementation of this helper function as described below:
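The arithmetic behind these values can be sketched independently of the class. Everything below is an assumption made for illustration: the struct and function names are hypothetical, positions are taken as normalised values between 0 and 1, each delay line is assumed to hold half a period, and the decay time is interpreted as the time for the level to fall by 60 dB. The filter coefficients are left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

struct StringParametersSketch
{
    std::size_t delayLineLength = 0;
    std::size_t triggerIndex = 0;
    std::size_t pickupIndex = 0;
    float decayCoefficient = 0.0f;
};

inline StringParametersSketch computeStringParameters (double sampleRate, double freqHz,
                                                       double triggerPos, double pickupPos,
                                                       double decayTimeSeconds)
{
    assert (sampleRate > 0.0 && freqHz > 0.0 && decayTimeSeconds > 0.0);
    assert (triggerPos >= 0.0 && triggerPos <= 1.0);
    assert (pickupPos >= 0.0 && pickupPos <= 1.0);

    StringParametersSketch p;

    // A round trip (forward line plus backward line) lasts one period of the
    // note, so each delay line holds half a period.
    p.delayLineLength = static_cast<std::size_t> (std::round (sampleRate / (2.0 * freqHz)));
    assert (p.delayLineLength >= 2);

    // Map the normalised positions onto indices of the delay line.
    const double lastIndex = static_cast<double> (p.delayLineLength - 1);
    p.triggerIndex = static_cast<std::size_t> (std::round (triggerPos * lastIndex));
    p.pickupIndex  = static_cast<std::size_t> (std::round (pickupPos * lastIndex));

    // Gain applied once per round trip, chosen so that the level has dropped
    // to 0.001 (-60 dB) after decayTimeSeconds.
    const double roundTripsPerSecond = sampleRate / (2.0 * static_cast<double> (p.delayLineLength));
    p.decayCoefficient = static_cast<float> (std::pow (0.001, 1.0 / (roundTripsPerSecond * decayTimeSeconds)));

    return p;
}
```

Lower notes need longer delay lines and make fewer round trips per second, so their per-round-trip gain has to be smaller to reach the same decay time.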

In the reset() function, we reset the delay lines to clear any old sample remaining:

In the prepare() function, we create a temporary audio block that will be used later for processing [6] and make sure that the parameters are still correct in case the sample rate was changed between sample blocks by calling the updateParameters() function again [7]:

To trigger the excitation of the string provoked by the plucking, we have to set the initial displacement of both waves represented by the two delay lines.

To do this in the trigger() function, first iterate over the samples from the start of the delay line up to the index of the trigger position. For each sample, map its index to an ascending value that reaches half of the note velocity at the trigger position, and assign it to the two delay lines in opposite directions [8]. Then do the same for the samples between the index of the trigger position and the end of the delay line, this time with values descending from half of the note velocity [9].

To generate all the samples in a buffer block, we declare a helper function that returns only one iteration of sample generation as follows:

back() function declared earlier.

In the process() function, simply process all the samples in the buffer block by calling the processSample() helper function and assigning the values to the temporary block created earlier [15]. Then copy the samples to all channels in the audio block [16] and add the content of the temporary block into the output block along with the original content contained in the input block [17].

In the Voice class, add a WaveguideString processor to the processor chain [18] and add its corresponding index in the enum [19].

In the noteStarted() function, remove the line that sets the level of the oscillator as we will be using the waveguide string model to generate sounds. Retrieve a reference to the string model from the processor chain [20], set the fundamental frequency of the string to the frequency of the note played [21] and trigger the plucking by calling the trigger() function with the note velocity [22].

Let's run the program and hear how it sounds.

DSPDelayLineTutorial_02.h file of the demo project.

In this tutorial, we have learnt how to implement a string model and a delay line. In particular, we have: