Create your own channel strip by learning how to daisy chain audio processors or plugins using an AudioProcessorGraph. Learn how to use the AudioProcessorGraph in both a plugin and standalone application context.

Level: Intermediate

Platforms: Windows, macOS, Linux

Classes: AudioProcessor, AudioProcessorPlayer, AudioProcessorGraph, AudioProcessorGraph::AudioGraphIOProcessor, AudioProcessorGraph::Node, AudioDeviceManager

Download the demo project for this tutorial here: PIP | ZIP. Unzip the project and open the first header file in the Projucer.

If you need help with this step, see Tutorial: Projucer Part 1: Getting started with the Projucer.

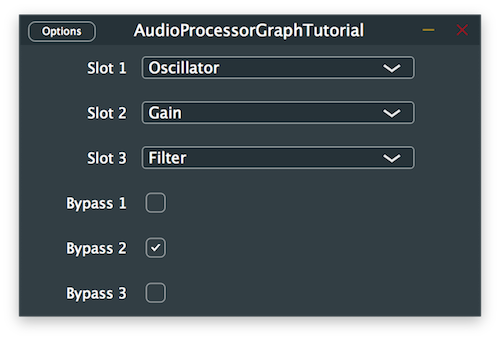

The demo project simulates a channel strip in which different audio processors can be applied in series. There are three slots that can be individually bypassed, and three processors to choose from for each slot: an oscillator, a gain control and a filter. The plugin processes the incoming audio and passes the modified signal to the output.

The AudioProcessorGraph is a special type of AudioProcessor that allows us to connect several AudioProcessor objects together as nodes in a graph and play back the result of the combined processing. To wire graph nodes together, we add connections between the channels of the nodes in the order in which we want the audio signal to be processed.

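The AudioProcessorGraph class also offers special node types for handling audio and MIDI input and output within the graph. In the channel strip, a properly connected graph routes the audio input node through the active processor slots in series to the audio output node, while the MIDI input node is connected directly to the MIDI output node.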
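To make the node-and-connection idea concrete outside JUCE, here is a minimal, hypothetical model in standard C++. It is not the AudioProcessorGraph API: ChainGraph, addNode() and addConnection() are illustrative names, the model processes a single mono buffer, and it only supports a serial chain, whereas the real graph connects individual channels and allows arbitrary topologies.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Hypothetical, JUCE-free model: each node wraps a processing function and
// connections decide the order in which the nodes run.
using Buffer    = std::vector<float>;
using ProcessFn = std::function<void (Buffer&)>;

class ChainGraph
{
public:
    // Adds a node and returns its ID (IDs start at 1).
    int addNode (ProcessFn fn)
    {
        nodes[nextId] = std::move (fn);
        return nextId++;
    }

    // Connects one node's output to another node's input. This model keeps a
    // single outgoing connection per node, which is enough for a serial chain.
    void addConnection (int sourceId, int destId)
    {
        assert (nodes.count (sourceId) == 1 && nodes.count (destId) == 1);
        next[sourceId] = destId;
    }

    // Runs the buffer through every node reachable from startId, in order.
    // Assumes the connections form a chain without cycles.
    void process (int startId, Buffer& buffer) const
    {
        for (int id = startId;;)
        {
            nodes.at (id) (buffer);
            auto it = next.find (id);
            if (it == next.end())
                break;
            id = it->second;
        }
    }

private:
    std::map<int, ProcessFn> nodes;
    std::map<int, int> next;
    int nextId = 1;
};
```

Connecting an offset node before a gain node would give a different result than the reverse order, which is why the order of the connections matters.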

Let's start by setting up the main AudioProcessorGraph to receive incoming signals and send them back to the corresponding output unprocessed.

To shorten the nested class names used frequently in this tutorial, we first declare using aliases for the AudioGraphIOProcessor and Node classes in the TutorialProcessor class.

Then we declare private member variables using these shortened class names.

Here we declare pointers to the main AudioProcessorGraph and to the input and output processor nodes, which are instantiated later within the graph.
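The aliases and members described above can be mirrored in standard C++ as a rough sketch. The Graph struct below is a hypothetical stand-in for AudioProcessorGraph with its nested Node and AudioGraphIOProcessor classes (the enum values mirror the names of JUCE's AudioGraphIOProcessor::IODeviceType), and std::shared_ptr approximates JUCE's reference-counted Node::Ptr; only the shape of the declarations is meant to carry over.

```cpp
#include <cassert>
#include <memory>
#include <type_traits>

// Hypothetical stand-in for a graph class with nested types, shaped like
// juce::AudioProcessorGraph with its Node and AudioGraphIOProcessor classes.
struct Graph
{
    struct Node { int nodeID = 0; };

    struct AudioGraphIOProcessor
    {
        enum IODeviceType { audioInputNode, audioOutputNode, midiInputNode, midiOutputNode };
    };
};

class TutorialProcessor
{
public:
    // Short aliases for the nested classes used throughout the processor.
    using AudioGraphIOProcessor = Graph::AudioGraphIOProcessor;
    using Node                  = Graph::Node;

    // The graph itself is created in the constructor.
    TutorialProcessor() : mainProcessor (std::make_unique<Graph>()) {}

    // The node pointers stay empty until the graph is initialised.
    bool hasIONodes() const { return audioInputNode != nullptr; }

private:
    std::unique_ptr<Graph> mainProcessor;
    std::shared_ptr<Node> audioInputNode, audioOutputNode, midiInputNode, midiOutputNode;
};
```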

Next, in the TutorialProcessor constructor we set the default bus properties for the plugin and instantiate the main AudioProcessorGraph.

Since we are dealing with a plugin, we need to implement the isBusesLayoutSupported() callback to inform the plugin host or DAW which channel sets we support. In this example we only support mono-to-mono and stereo-to-stereo configurations.
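The rule above can be expressed as a small pure function. This is a hypothetical, JUCE-free sketch that describes each bus by its channel count, with 0 standing for a disabled bus; a real isBusesLayoutSupported() override would instead compare the juce::AudioChannelSet values returned by getMainInputChannelSet() and getMainOutputChannelSet() against AudioChannelSet::mono() and AudioChannelSet::stereo().

```cpp
#include <cassert>

// Hypothetical, JUCE-free model of the layout rule: each bus is described by
// its channel count, with 0 meaning the bus is disabled.
bool isLayoutSupported (int numInputChannels, int numOutputChannels)
{
    assert (numInputChannels >= 0 && numOutputChannels >= 0);

    // Only mono or stereo outputs are accepted (this also rejects a disabled output).
    if (numOutputChannels != 1 && numOutputChannels != 2)
        return false;

    // The input must use the same channel set as the output.
    return numInputChannels == numOutputChannels;
}
```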

For the TutorialProcessor to process audio through the graph, we override the three main signal-processing functions of the AudioProcessor class, namely prepareToPlay(), releaseResources() and processBlock(), and call the same functions on the AudioProcessorGraph.

Let's start with the prepareToPlay() function. First we inform the AudioProcessorGraph of the number of I/O channels, the sample rate and the number of samples per block by calling the setPlayConfigDetails() function.

We then call the prepareToPlay() function on the AudioProcessorGraph with the same information and call the initialiseGraph() helper function which we define later on to create and connect the nodes in the graph.

The releaseResources() function is self-explanatory: it simply calls the same function on the AudioProcessorGraph instance.
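The forwarding pattern can be sketched in plain C++ with a hypothetical graph that simply logs the calls it receives. The order matches the description above: setPlayConfigDetails(), then prepareToPlay(), then initialiseGraph(), which is only a placeholder here. In a real plugin the channel counts would come from the processor itself, for example via getMainBusNumInputChannels() and getMainBusNumOutputChannels().

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for the inner graph: it only records which
// callbacks it receives so that the forwarding order can be checked.
struct RecordingGraph
{
    std::vector<std::string> calls;
    double sampleRate = 0.0;
    int blockSize = 0;

    void setPlayConfigDetails (int /*numIns*/, int /*numOuts*/, double rate, int block)
    {
        sampleRate = rate;
        blockSize  = block;
        calls.push_back ("setPlayConfigDetails");
    }

    void prepareToPlay (double, int) { calls.push_back ("prepareToPlay"); }
    void releaseResources()          { calls.push_back ("releaseResources"); }
};

// Hypothetical outer processor that forwards its lifecycle to the graph.
class ForwardingProcessor
{
public:
    RecordingGraph graph;

    void prepareToPlay (double sampleRate, int samplesPerBlock)
    {
        assert (sampleRate > 0.0 && samplesPerBlock > 0);
        graph.setPlayConfigDetails (numInputChannels, numOutputChannels, sampleRate, samplesPerBlock);
        graph.prepareToPlay (sampleRate, samplesPerBlock);
        initialiseGraph();
    }

    void releaseResources() { graph.releaseResources(); }

private:
    int numInputChannels = 2, numOutputChannels = 2;

    // Placeholder for the node creation and wiring described later.
    void initialiseGraph() { graph.calls.push_back ("initialiseGraph"); }
};
```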

Finally, in the processBlock() function we clear any additional output channels that have no corresponding input channel, since they may contain garbage data, and call the updateGraph() helper function (defined later), which rebuilds the graph if the channel strip configuration has changed. At the end of the function, we call processBlock() on the AudioProcessorGraph.
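The channel-clearing step can be sketched without JUCE by modelling the buffer as nested std::vector objects. In JUCE itself this corresponds to looping from getTotalNumInputChannels() to getTotalNumOutputChannels() and calling AudioBuffer::clear() on each of those channels; the function name below is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using MultiChannelBuffer = std::vector<std::vector<float>>;

// Zeroes every channel from numInputChannels up to numOutputChannels, so that
// output channels without a matching input never carry leftover data.
void clearExtraOutputChannels (MultiChannelBuffer& buffer, int numInputChannels, int numOutputChannels)
{
    assert (numInputChannels >= 0 && numOutputChannels <= static_cast<int> (buffer.size()));

    for (int ch = numInputChannels; ch < numOutputChannels; ++ch)
    {
        auto& channel = buffer[static_cast<std::size_t> (ch)];
        std::fill (channel.begin(), channel.end(), 0.0f);
    }
}
```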

The initialiseGraph() function called earlier in the prepareToPlay() callback starts by clearing the AudioProcessorGraph of any previously present nodes and connections. This also deletes the AudioProcessor instances associated with the removed nodes. We then instantiate the AudioGraphIOProcessor objects for the graph I/O and add them as nodes in the graph.

We still have to add connections between the newly created nodes to propagate the audio and MIDI data, which is done in dedicated helper functions.

Here we call the addConnection() function on the AudioProcessorGraph instance, passing the source and destination we wish to connect in the form of a Connection object. Each end of a connection requires a nodeID and a channel index, and the process is repeated for every required channel.

The same is done for the MIDI I/O nodes, except for the channel index argument: since MIDI signals are not sent through regular audio channels, we have to supply a special channel index defined as an enum in the AudioProcessorGraph class.
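The set-up and wiring steps can be modelled without JUCE as follows. Everything here is hypothetical: Graph, Node, Connection and the sentinel midiChannelIndex only imitate the structure of the real classes (JUCE provides its own AudioProcessorGraph::midiChannelIndex value), and std::shared_ptr approximates the reference-counted node pointers, so an old node is only destroyed once both the graph and the member pointer have let go of it.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical sentinel standing in for the graph's special MIDI channel
// index; it only needs to differ from every audio channel index.
constexpr int midiChannelIndex = -1;

struct NodeAndChannel { int nodeID; int channelIndex; };
struct Connection     { NodeAndChannel source, destination; };
struct Node           { int nodeID; };

// Minimal JUCE-free graph: it only stores nodes and connections.
class Graph
{
public:
    std::shared_ptr<Node> addNode()
    {
        nodes.push_back (std::make_shared<Node> (Node { nextId++ }));
        return nodes.back();
    }

    void addConnection (const Connection& c)
    {
        assert (c.source.nodeID != c.destination.nodeID);
        connections.push_back (c);
    }

    // Removes every node and connection from the graph.
    void clear()
    {
        nodes.clear();
        connections.clear();
    }

    std::vector<std::shared_ptr<Node>> nodes;
    std::vector<Connection> connections;

private:
    int nextId = 1;
};

struct ChannelStrip
{
    Graph graph;
    std::shared_ptr<Node> audioInputNode, audioOutputNode, midiInputNode, midiOutputNode;

    void initialiseGraph()
    {
        graph.clear();                      // start from an empty graph

        audioInputNode  = graph.addNode();  // reassigning releases any old nodes
        audioOutputNode = graph.addNode();
        midiInputNode   = graph.addNode();
        midiOutputNode  = graph.addNode();

        connectAudioNodes();
        connectMidiNodes();
    }

    // One connection per audio channel (stereo here).
    void connectAudioNodes()
    {
        for (int channel = 0; channel < 2; ++channel)
            graph.addConnection ({ { audioInputNode->nodeID,  channel },
                                   { audioOutputNode->nodeID, channel } });
    }

    // MIDI uses the special channel index instead of an audio channel.
    void connectMidiNodes()
    {
        graph.addConnection ({ { midiInputNode->nodeID,  midiChannelIndex },
                               { midiOutputNode->nodeID, midiChannelIndex } });
    }
};
```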

At this stage of the tutorial, we should be able to hear the signal pass through the graph without being altered.

In this part of the tutorial, we create different processors that we can use within our channel strip plugin to alter the incoming audio signal. Feel free to create additional processors or customise the following ones to your taste.

To avoid repeating code across the processors we want to create, let's start by declaring an AudioProcessor base class that the individual processors inherit from, so that the necessary functions are overridden only once.
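In JUCE, juce::AudioProcessor declares many pure virtual functions (getName(), acceptsMidi() and getStateInformation() among them), which is what makes a shared base class worthwhile. The standard C++ sketch below shows the pattern with a hypothetical, much smaller interface: every hook gets a harmless default, so a concrete processor such as the illustrative InvertProcessor only overrides what it needs.

```cpp
#include <cassert>
#include <string>
#include <vector>

using Buffer = std::vector<float>;

// Hypothetical base class: every hook has a harmless default, so each
// concrete processor only overrides what it actually needs.
class ProcessorBase
{
public:
    virtual ~ProcessorBase() = default;

    virtual void prepareToPlay (double /*sampleRate*/, int /*maximumBlockSize*/) {}
    virtual void releaseResources() {}
    virtual void processBlock (Buffer&) {}
    virtual void reset() {}
    virtual std::string getName() const { return {}; }
    virtual bool acceptsMidi() const { return false; }
    virtual bool producesMidi() const { return false; }
    virtual double getTailLengthSeconds() const { return 0.0; }
};

// A concrete processor now needs only a couple of overrides.
class InvertProcessor : public ProcessorBase
{
public:
    std::string getName() const override { return "Invert"; }

    void processBlock (Buffer& buffer) override
    {
        for (auto& sample : buffer)
            sample = -sample;   // flip the polarity of every sample
    }
};
```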

The first processor is a simple oscillator that generates a constant sine wave tone at 440Hz.

We derive the OscillatorProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name and declare a dsp::Oscillator object from the DSP module.

In the constructor, we set the frequency and the waveform of the oscillator by calling the setFrequency() and initialise() functions, respectively, on the dsp::Oscillator object.

In the prepareToPlay() function, we create a dsp::ProcessSpec object that describes the sample rate and the number of samples per block, and pass it to the dsp::Oscillator object by calling its prepare() function.

Next, in the processBlock() function we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, wrap it in a dsp::ProcessContextReplacing processing context and pass that context to the process() function of the dsp::Oscillator object.

Finally, we reset the state of the dsp::Oscillator object by overriding the reset() function of the AudioProcessor and calling the same function on it.
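The oscillator's behaviour can be approximated with a phase accumulator. This is a hypothetical, JUCE-free sketch rather than the implementation of dsp::Oscillator: initialise() stores a waveform function, setFrequency() sets the pitch, prepare() receives the sample rate, process() overwrites the buffer (as a replacing context would) and reset() returns the phase to zero.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, JUCE-free oscillator: a phase accumulator that feeds a
// waveform function, in the spirit of (but not identical to) dsp::Oscillator.
class SimpleOscillator
{
public:
    void initialise (std::function<float (float)> waveform) { wave = std::move (waveform); }
    void setFrequency (float newFrequency)                   { frequency = newFrequency; }

    void prepare (double newSampleRate)
    {
        assert (newSampleRate > 0.0);
        sampleRate = newSampleRate;
        reset();
    }

    void reset() { phase = 0.0; }

    // Overwrites the buffer with the generated signal, like a "replacing" context.
    void process (std::vector<float>& buffer)
    {
        constexpr double twoPi = 6.283185307179586;
        const double increment = twoPi * frequency / sampleRate;

        for (auto& sample : buffer)
        {
            sample = wave (static_cast<float> (phase));
            phase += increment;

            if (phase >= twoPi)
                phase -= twoPi;   // keep the phase in [0, 2*pi)
        }
    }

private:
    std::function<float (float)> wave = [] (float x) { return std::sin (x); };
    float frequency   = 440.0f;
    double sampleRate = 44100.0;
    double phase      = 0.0;
};
```

At a sample rate of 1760 Hz, a 440 Hz tone spans exactly four samples, so one block of four samples comes out as approximately 0, 1, 0, -1, which makes the sketch easy to check.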

We now have an oscillator that we can use in our channel strip plugin.

The second processor is a simple gain control that attenuates the incoming signal by 6 dB.

We derive the GainProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name and declare a dsp::Gain object from the DSP module.

In the constructor, we set the gain of the gain control in decibels by calling the setGainDecibels() function on the dsp::Gain object.

In the prepareToPlay() function, we create a dsp::ProcessSpec object that describes the sample rate, the number of samples per block and the number of channels, and pass it to the dsp::Gain object by calling its prepare() function.

Next, in the processBlock() function we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, wrap it in a dsp::ProcessContextReplacing processing context and pass that context to the process() function of the dsp::Gain object.

Finally, we reset the state of the dsp::Gain object by overriding the reset() function of the AudioProcessor and calling the same function on it.
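A fixed gain expressed in decibels is converted to a linear factor with 10^(dB/20), so -6 dB corresponds to a factor of about 0.501. The sketch below is a hypothetical, JUCE-free gain stage showing only that conversion and the per-sample multiplication; JUCE offers juce::Decibels::decibelsToGain() for the conversion, and dsp::Gain can additionally smooth gain changes via setRampDurationSeconds().

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical, JUCE-free gain stage configured in decibels.
class DecibelGain
{
public:
    // Converts decibels to a linear factor: gain = 10^(dB / 20).
    void setGainDecibels (float decibels) { linearGain = std::pow (10.0f, decibels / 20.0f); }
    float getGainLinear() const           { return linearGain; }

    // Scales every sample of every channel in place.
    void process (std::vector<std::vector<float>>& channels) const
    {
        for (auto& channel : channels)
            for (auto& sample : channel)
                sample *= linearGain;
    }

private:
    float linearGain = 1.0f;
};
```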

We now have a gain control that we can use in our channel strip plugin.

The third processor is a simple high-pass filter that attenuates frequencies below 1 kHz.

We derive the FilterProcessor class from the previously defined ProcessorBase, override the getName() function to provide a meaningful name and declare a dsp::ProcessorDuplicator object from the DSP module. This allows us to take a mono dsp::IIR::Filter processor and turn it into a multi-channel version, with a dsp::IIR::Coefficients object as the state shared by all channels.

In the prepareToPlay() function, we first generate the filter coefficients with the makeHighPass() function and assign them as the shared processing state of the duplicator. We then create a dsp::ProcessSpec object that describes the sample rate, the number of samples per block and the number of channels, and pass it to the dsp::ProcessorDuplicator object by calling its prepare() function.

Next, in the processBlock() function we create a dsp::AudioBlock object from the AudioSampleBuffer passed as an argument, wrap it in a dsp::ProcessContextReplacing processing context and pass that context to the process() function of the dsp::ProcessorDuplicator object.

Finally, we reset the state of the dsp::ProcessorDuplicator object by overriding the reset() function of the AudioProcessor and calling the same function on it.
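The idea behind dsp::ProcessorDuplicator, one filter state per channel sharing a single set of coefficients, can be sketched in standard C++. The coefficients below follow the widely used RBJ Audio EQ Cookbook high-pass formulas with Q = 1/sqrt(2) (a Butterworth response); this is an assumption for illustration, and JUCE's dsp::IIR::Coefficients::makeHighPass() may compute its coefficients differently. All names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <memory>
#include <vector>

// Normalised biquad coefficients (a0 already divided out).
struct Coefficients { double b0, b1, b2, a1, a2; };

// RBJ cookbook high-pass; q = 1/sqrt(2) gives a Butterworth response.
std::shared_ptr<Coefficients> makeHighPass (double sampleRate, double frequency, double q = 0.7071067811865476)
{
    constexpr double pi = 3.14159265358979323846;
    const double w0    = 2.0 * pi * frequency / sampleRate;
    const double cosW0 = std::cos (w0);
    const double alpha = std::sin (w0) / (2.0 * q);
    const double a0    = 1.0 + alpha;

    return std::make_shared<Coefficients> (Coefficients { (1.0 + cosW0) / 2.0 / a0,
                                                          -(1.0 + cosW0) / a0,
                                                          (1.0 + cosW0) / 2.0 / a0,
                                                          -2.0 * cosW0 / a0,
                                                          (1.0 - alpha) / a0 });
}

// One mono filter: owns its delay state but reads shared coefficients.
struct MonoFilter
{
    double z1 = 0.0, z2 = 0.0;

    float processSample (float x, const Coefficients& c)
    {
        // Transposed direct form II.
        const double y = c.b0 * x + z1;
        z1 = c.b1 * x - c.a1 * y + z2;
        z2 = c.b2 * x - c.a2 * y;
        return static_cast<float> (y);
    }
};

// One MonoFilter per channel, all sharing a single coefficient set.
class DuplicatedFilter
{
public:
    std::shared_ptr<Coefficients> state;

    void prepare (int numChannels) { filters.assign (static_cast<std::size_t> (numChannels), MonoFilter {}); }
    void reset()                   { for (auto& f : filters) f = MonoFilter {}; }

    void process (std::vector<std::vector<float>>& channels)
    {
        assert (state != nullptr && channels.size() == filters.size());

        for (std::size_t ch = 0; ch < channels.size(); ++ch)
            for (auto& sample : channels[ch])
                sample = filters[ch].processSample (sample, *state);
    }

private:
    std::vector<MonoFilter> filters;
};
```

Because a high-pass filter has zero gain at DC, a constant input decays towards zero, while a silent channel stays silent because each channel keeps its own state.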

We now have a filter that we can use in our channel strip plugin.

Now that we have implemented multiple processors that can be used within the AudioProcessorGraph, let's start connecting them together depending on the user selection.

In the TutorialProcessor class, we add three AudioParameterChoice pointers and four AudioParameterBool pointers as private member variables: the choice parameters store the processor selected for each slot of the channel strip, three of the bool parameters store the corresponding bypass states, and the fourth controls whether the input is muted. We also declare node pointers for the three processor slots, which are instantiated later within the graph, and provide the selectable choices as a StringArray for convenience.

Then, in the constructor, we instantiate the audio parameters and call the addParameter() function to tell the AudioProcessor which parameters should be available in the plugin.

This tutorial makes use of the GenericAudioProcessorEditor class, which automatically creates a ComboBox for each AudioParameterChoice parameter in the plugin's processor and a ToggleButton for each AudioParameterBool parameter.
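The parameter set-up can be modelled without JUCE to show the ownership and the mapping to editor controls. In this hypothetical sketch, ParameterOwner stands in for the processor (in JUCE, AudioProcessor::addParameter() takes ownership of the parameter object) and controlType() names the widget a generic editor would create, following the ComboBox and ToggleButton mapping described above. The parameter names are illustrative.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, JUCE-free stand-ins for AudioParameterChoice and
// AudioParameterBool. controlType() names the widget a generic editor builds.
struct Parameter
{
    explicit Parameter (std::string parameterName) : name (std::move (parameterName)) {}
    virtual ~Parameter() = default;
    virtual std::string controlType() const = 0;

    std::string name;
};

struct ChoiceParameter : Parameter
{
    ChoiceParameter (std::string parameterName, std::vector<std::string> choiceList, int defaultIndex)
        : Parameter (std::move (parameterName)), choices (std::move (choiceList)), index (defaultIndex)
    {
        assert (index >= 0 && index < static_cast<int> (choices.size()));
    }

    std::string controlType() const override { return "ComboBox"; }

    std::vector<std::string> choices;
    int index;
};

struct BoolParameter : Parameter
{
    BoolParameter (std::string parameterName, bool defaultValue)
        : Parameter (std::move (parameterName)), value (defaultValue) {}

    std::string controlType() const override { return "ToggleButton"; }

    bool value;
};

// The owner keeps every parameter alive; callers keep plain pointers.
struct ParameterOwner
{
    std::vector<std::unique_ptr<Parameter>> parameters;

    template <typename ParameterType>
    ParameterType* addParameter (std::unique_ptr<ParameterType> parameter)
    {
        auto* raw = parameter.get();
        parameters.push_back (std::move (parameter));
        return raw;
    }
};
```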

When setting up the AudioProcessorGraph in the first part of the tutorial, we called the updateGraph() helper function in the processBlock() callback of the TutorialProcessor class. The purpose of this function is to update the graph by reinstantiating the appropriate AudioProcessor objects and nodes and by reconnecting the graph according to the choices currently selected by the user. Let's implement that helper function now.

The function starts by declaring a local variable representing the state of the graph and whether it has changed since the last iteration of the audio block processing. It also creates arrays to facilitate iteration over the processor choices, bypass states and their corresponding nodes in the graph.

In the next part, we iterate over the three available processor slots and check the option selected in each of the AudioParameterChoice objects.

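If the "Empty" option is selected and the slot previously held a node, we remove that node from the graph, clear the slot and set the hasChanged flag to true. Otherwise, the state has not changed and the graph does not need rebuilding. If a processor is selected and the slot already holds that same processor, nothing needs to change; otherwise we remove any node previously held by the slot, add a new node containing the selected processor and set the hasChanged flag to true. The next section is only performed if the state of the graph has changed, and it reconnects the nodes so that the active slots are processed in series.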

In the last section of the updateGraph() helper function, we deal with the bypass state of the processors: for each active slot, we bypass its AudioProcessor if the corresponding check box is toggled. We also check whether to mute the input, to avoid feedback loops when testing. Finally, we assign the newly created nodes back to their corresponding slot members for the next iteration.
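The decision logic of updateGraph() can be isolated from JUCE in a small model. This hypothetical sketch tracks only the name of the processor in each slot: a slot is replaced only when the user's choice differs from what it already holds, the serial chain is rebuilt from the non-empty slots, and the bypass and mute states are applied on every call. Node creation, removal and the actual connections are left out.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, JUCE-free model of updateGraph(). A slot holds the name of
// the processor it contains, or an empty string when it is empty.
const std::array<std::string, 4> processorChoices { "Empty", "Oscillator", "Gain", "Filter" };

struct StripState
{
    std::array<std::string, 3> slots;   // current contents of the three slots
    std::array<bool, 3> bypassed {};    // bypass flag applied to each slot
    bool inputBypassed = false;         // models muting the input node
};

// Updates the slots from the user's choices and returns true only if a slot's
// contents changed, i.e. when the connections have to be rebuilt.
bool updateSlots (StripState& state, const std::array<int, 3>& choiceIndices,
                  const std::array<bool, 3>& bypasses, bool muteInput)
{
    bool hasChanged = false;

    for (std::size_t i = 0; i < 3; ++i)
    {
        const int index = choiceIndices[i];
        assert (index >= 0 && index < static_cast<int> (processorChoices.size()));

        const std::string wanted = index == 0 ? std::string() : processorChoices[static_cast<std::size_t> (index)];

        if (state.slots[i] != wanted)   // same processor already loaded: keep it
        {
            state.slots[i] = wanted;    // remove the old node and/or add a new one
            hasChanged = true;
        }
    }

    // Bypass states are applied on every call, whether or not the graph changed.
    for (std::size_t i = 0; i < 3; ++i)
        state.bypassed[i] = ! state.slots[i].empty() && bypasses[i];

    state.inputBypassed = muteInput;
    return hasChanged;
}

// Serial routing: input -> each non-empty slot in order -> output. With no
// active slot this becomes a direct input -> output connection.
std::vector<std::string> buildChain (const StripState& state)
{
    std::vector<std::string> chain { "Input" };

    for (const auto& slot : state.slots)
        if (! slot.empty())
            chain.push_back (slot);

    chain.push_back ("Output");
    return chain;
}
```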

The plugin should now run by processing incoming audio through the loaded processors within the graph.

If you are interested in using the AudioProcessorGraph within a standalone app, this optional section covers it in detail.

First of all, we have to convert our main TutorialProcessor class into a subclass of Component instead of AudioProcessor. To match the naming convention of other JUCE GUI applications, we also rename the class to MainComponent.

Make sure that the Main.cpp file follows the "GUI Application" template format.

When creating a plugin, all the I/O device management and playback functionality is controlled by the host, so we don't need to set it up. In a standalone application, however, we have to manage this ourselves. This is why we declare an AudioDeviceManager and an AudioProcessorPlayer as private member variables in the MainComponent class: together they allow communication between our AudioProcessorGraph and the audio I/O devices available on the system.

The AudioDeviceManager is a convenient class that manages audio and MIDI devices on all platforms, and the AudioProcessorPlayer allows for easy playback through an AudioProcessorGraph.

In the constructor, instead of initialising plugin parameters, we create regular GUI components and initialise the AudioDeviceManager and the AudioProcessorPlayer.

Here we first initialise the device manager with the default audio device and two inputs and two outputs [1]. We then add the AudioProcessorPlayer as an audio callback to the device manager [2] and as a MIDI callback using the default MIDI device [3]. After initialising the graph, we set the AudioProcessorGraph as the processor to play by calling the setProcessor() function on the AudioProcessorPlayer [4].

Then, in the destructor, we make sure to remove the AudioProcessorPlayer as an audio and MIDI callback on application shutdown.
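The registration and clean-up steps can be sketched with hypothetical stand-ins. FakeDeviceManager and FakePlayer are not JUCE classes; they only mimic the roles of AudioDeviceManager (addAudioCallback(), removeAudioCallback()) and AudioProcessorPlayer (setProcessor()), and MIDI is omitted. The point is the lifetime rule: the component registers the player when it is constructed and unregisters it in its destructor, so the device never calls into a destroyed object.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical interfaces standing in for a device callback and a processor.
struct AudioCallback
{
    virtual ~AudioCallback() = default;
    virtual void audioBlock (std::vector<float>& block) = 0;
};

struct Processor
{
    virtual ~Processor() = default;
    virtual void processBlock (std::vector<float>& block) = 0;
};

// Hypothetical device manager: drives every registered callback.
class FakeDeviceManager
{
public:
    void addAudioCallback (AudioCallback* callback) { callbacks.push_back (callback); }

    void removeAudioCallback (AudioCallback* callback)
    {
        callbacks.erase (std::remove (callbacks.begin(), callbacks.end(), callback), callbacks.end());
    }

    std::size_t getNumCallbacks() const { return callbacks.size(); }

    // Simulates the audio device requesting one block from each callback.
    void runOneBlock (std::vector<float>& block)
    {
        for (auto* callback : callbacks)
            callback->audioBlock (block);
    }

private:
    std::vector<AudioCallback*> callbacks;
};

// Hypothetical player: forwards each device block to the current processor.
class FakePlayer : public AudioCallback
{
public:
    void setProcessor (Processor* newProcessor) { processor = newProcessor; }

    void audioBlock (std::vector<float>& block) override
    {
        if (processor != nullptr)
            processor->processBlock (block);
    }

private:
    Processor* processor = nullptr;
};

// Stand-in for the graph: halves every sample.
struct HalvingGraph : Processor
{
    void processBlock (std::vector<float>& block) override
    {
        for (auto& sample : block)
            sample *= 0.5f;
    }
};

// Registers the player on construction and unregisters it on destruction.
class MainComponentModel
{
public:
    explicit MainComponentModel (FakeDeviceManager& manager) : deviceManager (manager)
    {
        deviceManager.addAudioCallback (&player);
        player.setProcessor (&graph);
    }

    ~MainComponentModel() { deviceManager.removeAudioCallback (&player); }

private:
    FakeDeviceManager& deviceManager;
    HalvingGraph graph;
    FakePlayer player;
};
```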

Notice that unlike the plugin implementation, the AudioProcessorPlayer deals with processing the audio automatically and therefore it will take care of calling the prepareToPlay() and processBlock() functions on the AudioProcessorGraph for us.

However, we still need a way to update the graph when the user changes parameters. We do so by deriving MainComponent from the Timer class and overriding the timerCallback() function.
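The polling approach can be sketched without a real timer. In this hypothetical model, something outside the class calls tick(), which forwards to timerCallback(); with juce::Timer, you would call startTimer() or startTimerHz() instead and let JUCE invoke timerCallback() on the message thread. Here updateGraph() only does work when the selection has changed, so frequent polling stays cheap.

```cpp
#include <cassert>

// Hypothetical minimal timer: something outside the class calls tick(),
// which forwards to timerCallback().
class PollingTimer
{
public:
    virtual ~PollingTimer() = default;
    virtual void timerCallback() = 0;
    void tick() { timerCallback(); }
};

class MainComponentModel : public PollingTimer
{
public:
    int selectedChoice = 0;   // stands in for the current ComboBox selection
    int rebuildCount   = 0;   // counts how often the graph would be rebuilt

    void timerCallback() override { updateGraph(); }

private:
    int lastChoice = 0;

    void updateGraph()
    {
        assert (selectedChoice >= 0);

        if (selectedChoice == lastChoice)
            return;           // nothing changed: keep the current graph

        lastChoice = selectedChoice;
        ++rebuildCount;       // a real implementation would rebuild the nodes here
    }
};
```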

Finally, we modify the updateGraph() function to take the playback configuration details from the AudioProcessorGraph instead of the main AudioProcessor, since the latter has been replaced by the AudioProcessorPlayer in our standalone app scenario.

Your plugin should now run as an application after these changes.

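The code for this standalone version can be found in the AudioProcessorGraphTutorial_02.h file of the demo project.

In this tutorial, we have learnt how to manipulate an AudioProcessorGraph to cascade the effects of plugins. In particular, we have built a channel strip plugin around an AudioProcessorGraph, created oscillator, gain and filter processors to load into its slots, rebuilt and bypassed the graph nodes according to the user's parameter choices, and played the same graph in a standalone application using an AudioDeviceManager and an AudioProcessorPlayer.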